In this article I want to talk about the CMS publishing process and how we were able to increase its performance and therefore minimize the time our customers have to wait for new content.

The publishing process in the Enterprise CMS Gentics Content.Node is responsible for preparing all objects (pages, files and folders) for use on your website or in your intranet. This roughly includes rendering the actual content in combination with the template provided, resolving and preparing all properties (Tagmap entries) and writing everything to the selected destination (e.g. a Content Repository). If you are interested in the technical details of the publishing process, I suggest you have a look at this article (http://www.gentics.com/Content.Node/guides/faq_publish_process.html) describing it in detail.

There are two different modes that need to be mentioned.

- Publishing into the file system for use in a static environment

- Publishing into a content repository for use in a dynamic environment

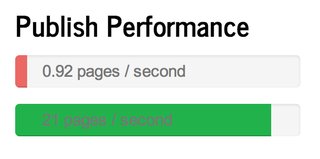

Publishing performance has always been a hot topic. In this article I want to talk about bringing your objects into the Gentics Content Repository and how we were able to improve the speed of this process. Our customers want to get their pages online fast and do not want to wait a long time for a publishing process to finish. This holds especially true when editors rework a navigation area containing many pages with lots of dependencies, or when template or implementation changes are deployed. In big projects of 30,000 pages and up, such an editing operation often results in a few thousand pages being marked for publishing. Such a publishing process used to take too long: we were able to publish only ~0.92 pages / second, depending on the database used for the content repository and the complexity of the implementation.

The customer I want to talk about had a project containing ~36,000 pages, and this project was scheduled for a relaunch. In this relaunch 51 new sub-customers were to be migrated to use this project. They also needed to be able to view those 36,000 pages, edit derivatives of those pages and create their own local pages. Using our multichannelling feature, this complicated requirement could easily be achieved, with the only drawback of multiplying the objects in the content management system by 52 (1 master and 51 channels), resulting in ~1.9 million pages. If we take the 0.92 pages / second and extrapolate to a target of 1.9 million pages, a so-called full publishing process, in which all attributes are re-rendered and the changed ones are written to the desired destination, would take more than three weeks. Waiting that long for a deployment would cripple the daily work of the editors and is therefore unacceptable.
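The extrapolation above can be reproduced with a few lines of Python. This is only a back-of-the-envelope sketch: the figures are the ones quoted in this article, and the helper name `full_publish_days` is made up for illustration.

```python
# Figures quoted in the article; the helper name is hypothetical.
MASTER_PAGES = 36_000
NODES = 1 + 51  # 1 master + 51 channels
BASELINE_RATE = 0.92  # pages per second before any optimisation


def full_publish_days(pages: int, pages_per_second: float) -> float:
    """Return the duration of a full publish run in days."""
    return pages / pages_per_second / 86_400


total_pages = MASTER_PAGES * NODES
print(total_pages)  # 1872000, i.e. roughly 1.9 million
print(f"{full_publish_days(total_pages, BASELINE_RATE):.1f} days")
```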

Until now, an error in the publishing process would immediately terminate it, and all pages would be re-published with the next run. As long-running publishing runs are more prone to errors (DB connection loss, power loss, faulty pages, …), our development team introduced a new feature, “resumable_publish_process”, which marks pages as published as soon as they are done. After an interrupted publishing run, only the pages that had not been completely published before the error or interrupt occurred are re-published.
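To illustrate the idea behind a resumable run, here is a minimal sketch in Python using an in-memory SQLite database. It is not the Content.Node implementation: the `page` table, the `published` flag and the `publish_run` function are assumptions made for this example only.

```python
import sqlite3


def publish_run(conn, render, fail_on=None):
    """Publish every page not yet marked as published, committing per page."""
    pending = [row[0] for row in conn.execute(
        "SELECT id FROM page WHERE published = 0 ORDER BY id")]
    done = []
    for page_id in pending:
        if page_id == fail_on:
            # Stands in for a lost DB connection, a faulty page, etc.
            raise RuntimeError(f"simulated failure at page {page_id}")
        render(page_id)
        conn.execute("UPDATE page SET published = 1 WHERE id = ?", (page_id,))
        conn.commit()  # the marker survives an interruption of the run
        done.append(page_id)
    return done


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE page (id INTEGER PRIMARY KEY, "
    "published INTEGER NOT NULL DEFAULT 0)")
conn.executemany("INSERT INTO page (id) VALUES (?)",
                 [(i,) for i in range(1, 6)])
conn.commit()

rendered = []
try:
    publish_run(conn, rendered.append, fail_on=4)  # interrupted run
except RuntimeError:
    pass  # pages 1 to 3 stay marked as published

resumed = publish_run(conn, rendered.append)  # only the remaining pages
print(resumed)  # [4, 5]
```

Committing the marker per page keeps the sketch simple; a real system would probably commit in larger units to limit the overhead.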

Our engineers were committed to reducing the publishing time to make such a deployment possible within one weekend. This is where the hard work began. We started out by moving the Gentics Content.Node installation to the desired hardware with the following configuration:

CPU: 4 Cores (~2.4 GHz)

Memory: 32 GB

HDD: 100 GB

NFS: 100 GB

The biggest share of the system memory was used by MySQL (~18 GB), another 8 GB were used by the JVM and the remaining 6 GB were distributed between the Apache web server and the OS cache. The target content repository was located on an Oracle cluster. We configured the multithreaded publishing engine to use 3 of the 4 available cores and started the first publishing process. We were not surprised that just throwing hardware at this problem had little to no effect.

Since databases are usually not designed to hold binary data, and in our case a ridiculously large amount of it, we decided to write those attributes to the file system instead. This is what the 100 GB of NFS storage are for. This measure significantly reduced the content repository size and increased the performance. At this point we were able to publish the 1.9 million pages at a rate of 2.6 pages / second. Increasing the number of CPU cores to 8 and doubling the cores used by the multithreaded publishing engine did not really give us any benefit here, since most of our threads were blocked while one thread was waiting for I/O.
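The principle of keeping binaries out of the database can be sketched as follows: the bytes go to a directory (standing in for the NFS share) and the database only stores a relative path. The table `binary_attr`, the file naming scheme and the function `store_binary` are hypothetical and only serve this illustration.

```python
import sqlite3
import tempfile
from pathlib import Path


def store_binary(conn, root: Path, object_id: int, data: bytes) -> Path:
    """Write data below root and record only its relative path in the DB."""
    relative = f"{object_id}.bin"  # naming scheme assumed for this sketch
    target = root / relative
    target.write_bytes(data)
    conn.execute(
        "INSERT OR REPLACE INTO binary_attr (object_id, path) VALUES (?, ?)",
        (object_id, relative))
    conn.commit()
    return target


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE binary_attr (object_id INTEGER PRIMARY KEY, "
    "path TEXT NOT NULL)")
root = Path(tempfile.mkdtemp())  # stands in for the NFS mount

payload = b"example binary payload"
stored = store_binary(conn, root, 42, payload)
(path,) = conn.execute(
    "SELECT path FROM binary_attr WHERE object_id = 42").fetchone()
print(path)  # 42.bin
```

The database row stays a few bytes long regardless of the file size, which is why the repository shrinks.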

After further analysing the logs, we found that an unnecessarily large number of SQL statements was issued to retrieve the dependencies for each page that needed to be rendered. Combining those statements into batches of 100 pages increased the performance to ~4 pages / second. The logs then suggested that writing the data to the Gentics Content Repository was now the bottleneck and therefore the next target of our analysis.
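The effect of combining per-page lookups into batches of 100 can be sketched like this. The example uses SQLite from the Python standard library instead of MySQL, and the `dependency` table and the `load_dependencies` function are assumptions for illustration, not the actual Content.Node schema or code.

```python
import sqlite3

BATCH_SIZE = 100  # batch size mentioned in the article


def chunks(items, size):
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def load_dependencies(conn, page_ids, batch_size=BATCH_SIZE):
    """Fetch dependencies with one statement per batch, not one per page."""
    deps = {page_id: [] for page_id in page_ids}
    statements = 0
    for batch in chunks(page_ids, batch_size):
        placeholders = ",".join("?" * len(batch))
        query = (
            "SELECT page_id, depends_on FROM dependency "
            f"WHERE page_id IN ({placeholders}) "
            "ORDER BY page_id, depends_on")
        for page_id, depends_on in conn.execute(query, batch):
            deps[page_id].append(depends_on)
        statements += 1
    return deps, statements


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE dependency (page_id INTEGER, depends_on INTEGER)")
page_ids = list(range(1, 251))
conn.executemany("INSERT INTO dependency VALUES (?, ?)",
                 [(p, p + 1000) for p in page_ids])

deps, statements = load_dependencies(conn, page_ids)
print(statements)  # 3 statements instead of 250
```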

Our brave engineers also combined the statements interacting with the Content Repository into batches, using JDBC batched inserts and updates. To be able to use this technique, the publishing process had to be refactored to support inserting batches within a single transaction. Still stuck at around 10 pages / second, we had to go back to the drawing board and search for the remaining performance hogs. A few publishing runs and thread dumps later, we found that our publishing threads still spent a lot of time in a waiting state, trying to read the actual page data from MySQL. Not being able to reduce the number of statements any further, our engineers had to think of something else and came up with a publishing cache feature, in which the raw data of every page is cached. After further tests we could finally sit back and watch a publishing run at an astonishing 21 pages / second.
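Both ideas from this step, batched writes within one transaction and a cache for raw page data, can be sketched in a few lines. Note that this is a Python analogue using `executemany` on SQLite rather than JDBC batching, and that the `rendered_page` table, the `PublishCache` class and the loader function are hypothetical names for this example.

```python
import sqlite3


class PublishCache:
    """Keeps raw page data in memory so repeated reads skip the source DB."""

    def __init__(self, loader):
        self._loader = loader  # called only on a cache miss
        self._data = {}
        self.misses = 0

    def get(self, page_id):
        if page_id not in self._data:
            self.misses += 1
            self._data[page_id] = self._loader(page_id)
        return self._data[page_id]


def write_batch(conn, rows):
    """Insert or update a whole batch of rendered pages in one transaction."""
    with conn:  # commits on success, rolls back if any statement fails
        conn.executemany(
            "INSERT OR REPLACE INTO rendered_page (page_id, content) "
            "VALUES (?, ?)",
            rows)


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE rendered_page (page_id INTEGER PRIMARY KEY, content TEXT)")

# The loader stands in for the expensive read of raw page data.
cache = PublishCache(lambda page_id: f"raw data of page {page_id}")


def publish(page_ids):
    """Render pages from cached raw data and write them as one batch."""
    write_batch(conn, [(p, cache.get(p).upper()) for p in page_ids])


publish([1, 2, 3])
publish([2, 3, 4])  # pages 2 and 3 are served from the cache

count = conn.execute("SELECT COUNT(*) FROM rendered_page").fetchone()[0]
print(count, cache.misses)  # 4 4
```

Six page reads were requested, but the loader ran only four times, and each publish call cost a single transaction.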

These ~75,600 pages / hour, or 1.9 million pages in about 25 hours, not only enable deployments with a full publish run within one weekend but also leave room for a second run in case of an error in the deployed code.
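To put the individual steps into perspective, the following sketch converts each publishing rate mentioned in this article into the duration of a full run over 1.9 million pages. The weekend window of 60 hours is an assumption made for this example, as are the stage labels.

```python
TOTAL_PAGES = 1_900_000
WEEKEND_HOURS = 60  # assumption: Friday 18:00 to Monday 06:00

# Rates in pages per second, as quoted in the article.
STAGES = [
    ("baseline", 0.92),
    ("binaries on the file system", 2.6),
    ("batched dependency queries", 4.0),
    ("batched repository writes", 10.0),
    ("publishing cache", 21.0),
]


def run_hours(pages: int, pages_per_second: float) -> float:
    """Return the duration of a full publish run in hours."""
    return pages / pages_per_second / 3600


for name, rate in STAGES:
    hours = run_hours(TOTAL_PAGES, rate)
    runs = int(WEEKEND_HOURS // hours)  # complete runs fitting the window
    print(f"{name:30s} {rate * 3600:>8.0f} pages/h "
          f"{hours:>7.1f} h  {runs} full run(s) per weekend")
```

Under this assumption only the final rate allows two complete runs in one weekend.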

All the improvements will be available with the feature release “Ash”, which will be released on Monday the 17th of June 2014.
